Paper: Dynamic obstacle avoidance for quadrotors with event cameras

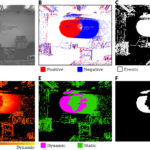

Today’s autonomous drones have reaction times of tens of milliseconds, which is too slow for fast navigation in complex, dynamic environments. To safely avoid fast-moving objects, drones need low-latency sensors and algorithms. We departed from state-of-the-art approaches by using event cameras, which are bioinspired sensors with reaction times of microseconds. Standard vision algorithms cannot be applied to event cameras, because these sensors do not output images but a stream of asynchronous events that encode per-pixel intensity changes. Our approach exploits the temporal information contained in the event stream to distinguish between static and dynamic objects, and it leverages a fast strategy to generate the motor commands needed to avoid approaching obstacles. The resulting algorithm has an overall latency of only 3.5 milliseconds, which is sufficient for reliably detecting and avoiding fast-moving obstacles. We demonstrate the effectiveness of our approach on an autonomous quadrotor using only onboard sensing and computation. Our drone was able to avoid multiple obstacles of different sizes and shapes, at relative speeds of up to 10 meters/second, both indoors and outdoors.
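The idea of using the event stream's temporal information to separate static from dynamic objects can be illustrated with a small sketch. The Python below is a hypothetical toy, not the authors' implementation. It assumes the events have already been ego-motion compensated, so that a static edge keeps firing at the same pixel throughout the time window. It then maps each active pixel to the mean of its normalized timestamps and flags pixels whose mean exceeds `mean + k * std` as dynamic. The `Event` class, the functions `mean_timestamp_image` and `dynamic_pixels`, and the threshold parameter `k` are all illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Event:
    """One asynchronous event: pixel location, timestamp, and polarity."""
    x: int
    y: int
    t: float       # timestamp in seconds
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def mean_timestamp_image(events):
    """Map each active pixel (x, y) to the mean of its timestamps, normalized to [0, 1].

    Assumption: `events` is non-empty and already ego-motion compensated.
    """
    t0 = min(e.t for e in events)
    t1 = max(e.t for e in events)
    span = (t1 - t0) or 1.0  # avoid division by zero if all timestamps are equal
    sums, counts = {}, {}
    for e in events:
        key = (e.x, e.y)
        sums[key] = sums.get(key, 0.0) + (e.t - t0) / span
        counts[key] = counts.get(key, 0) + 1
    return {key: sums[key] / counts[key] for key in sums}


def dynamic_pixels(ts_image, k=1.0):
    """Flag pixels whose mean timestamp exceeds mean + k * std (hypothetical rule)."""
    values = list(ts_image.values())
    threshold = mean(values) + k * pstdev(values)
    return {p for p, v in ts_image.items() if v > threshold}


# Toy data over a 10 ms window: three static pixels fire throughout the window,
# while a moving object produces one event per pixel as it sweeps along row 5.
static = [Event(x, 10, t / 1000, 1) for x in (10, 11, 12) for t in range(11)]
moving = [Event(x, 5, x / 1000, 1) for x in range(10)]
img = mean_timestamp_image(static + moving)
print(sorted(dynamic_pixels(img)))  # → [(8, 5), (9, 5)]
```

The design intuition is that the pixels at a moving object's most recent position receive only late events, so their mean normalized timestamp sits near 1, while compensated static pixels average near 0.5. For scale on the latency figure: at a relative speed of 10 m/s, a 3.5 ms latency corresponds to the obstacle closing 10 × 0.0035 = 0.035 m, about 3.5 cm, whereas a 20 ms latency would correspond to 20 cm.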
